Performance Max asset A/B testing: how to run experiments and optimize your Google Ads creatives

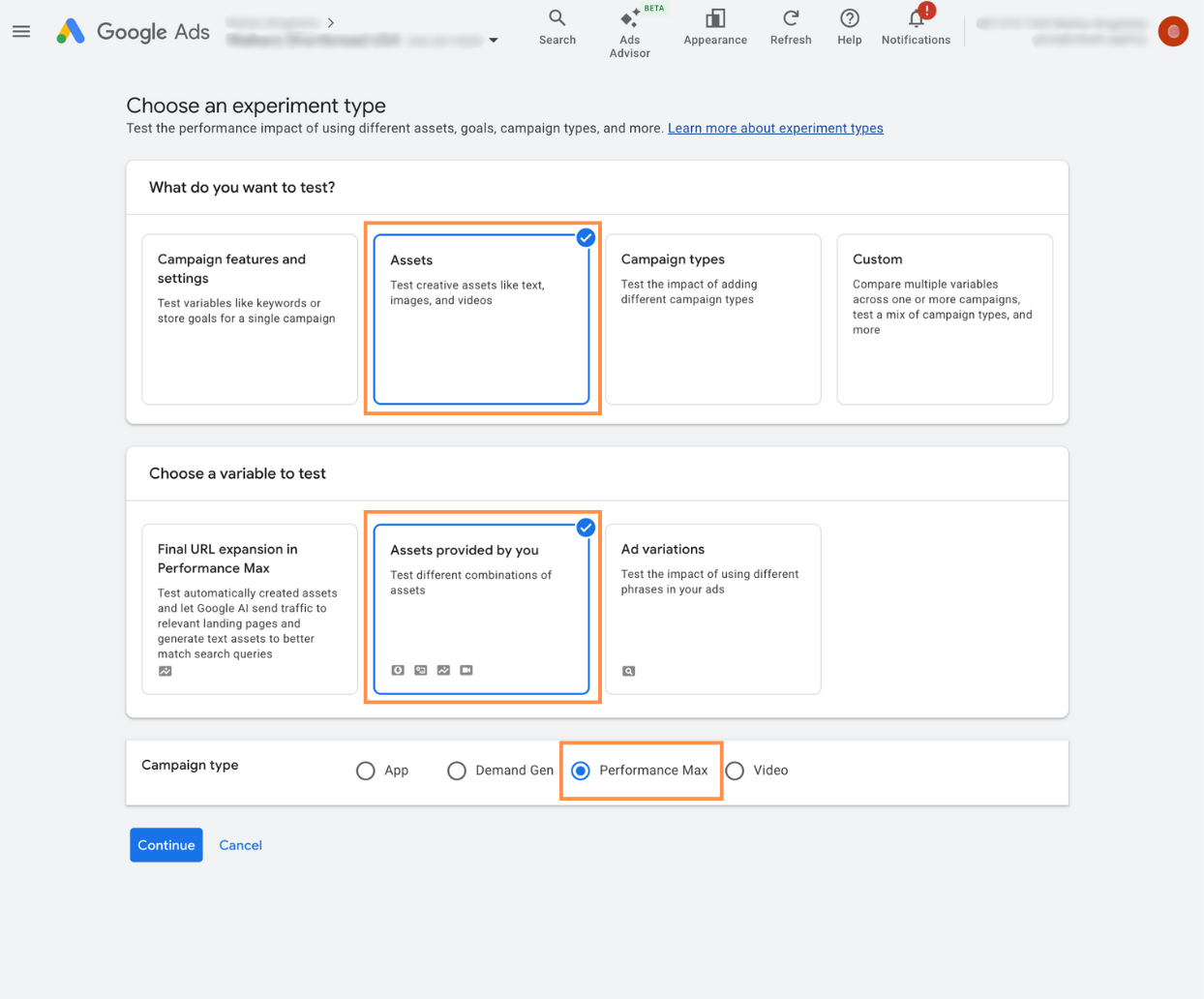

For the first time, Google is moving beyond “asset-level signals” toward something closer to real A/B testing inside PMax: controlled experiments between asset sets within a single asset group, with a defined traffic split and statistical guidance.

On paper it sounds like a small UX update. In practice, it nudges PMax a bit closer to how performance marketers actually work.

Why this matters more than “just another beta”

Anyone who has run PMax at scale has felt the same tension: Google insists on bundling everything into one black box, while our work depends on isolating variables.

Until now, creative “testing” in PMax has been largely inferential:

- Asset reports with delayed, aggregated signals

- Performance labels like “Low”, “Good”, “Best” with no clear counterfactual

- Changes to asset groups that quietly reset learning and muddy attribution

You could approximate tests by duplicating campaigns or asset groups, but then you were also changing inventory access, auction dynamics, and sometimes budgets and bid strategies. In other words: you weren’t testing just creatives, you were testing different systems.

The new asset A/B experiments finally separate creative comparison from structural changes. Same campaign, same asset group, same settings – only the creative set differs. That’s a much cleaner experiment design.

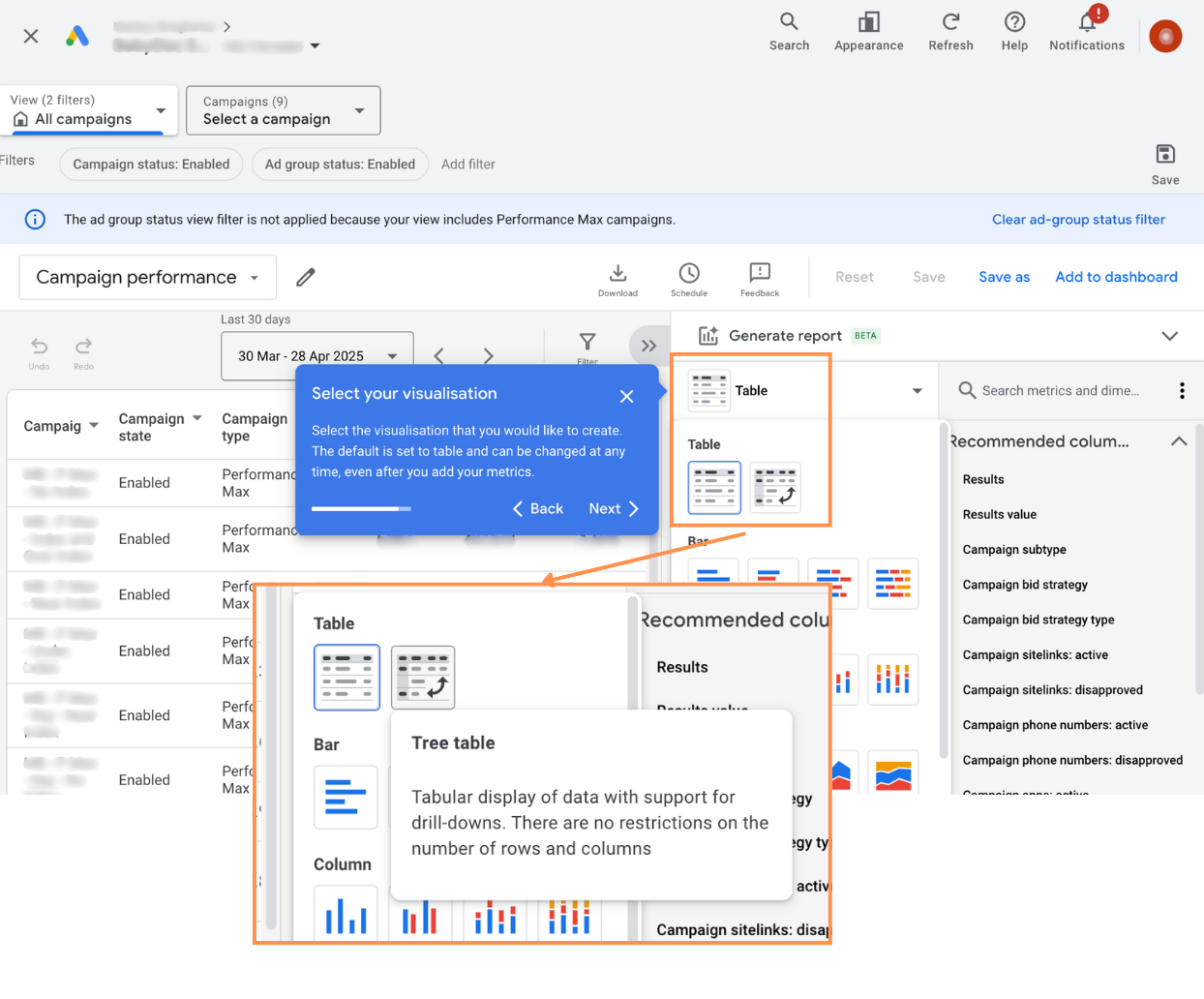

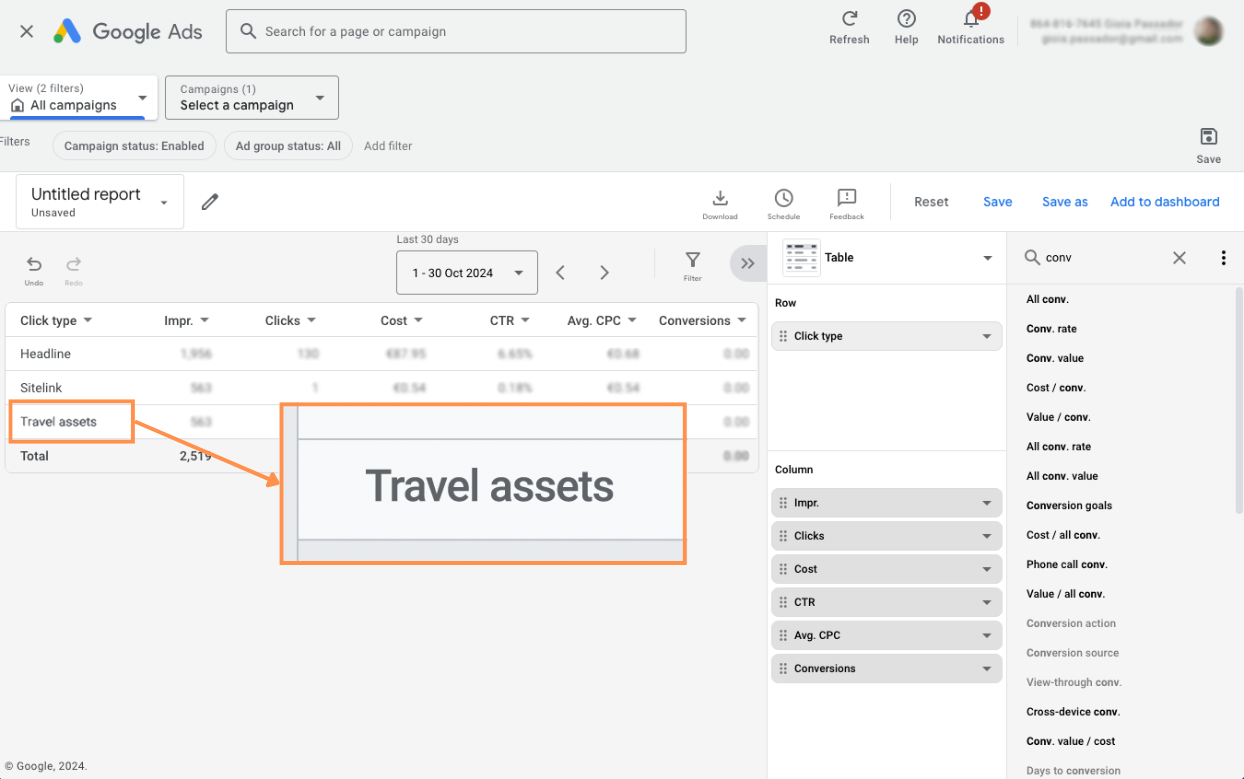

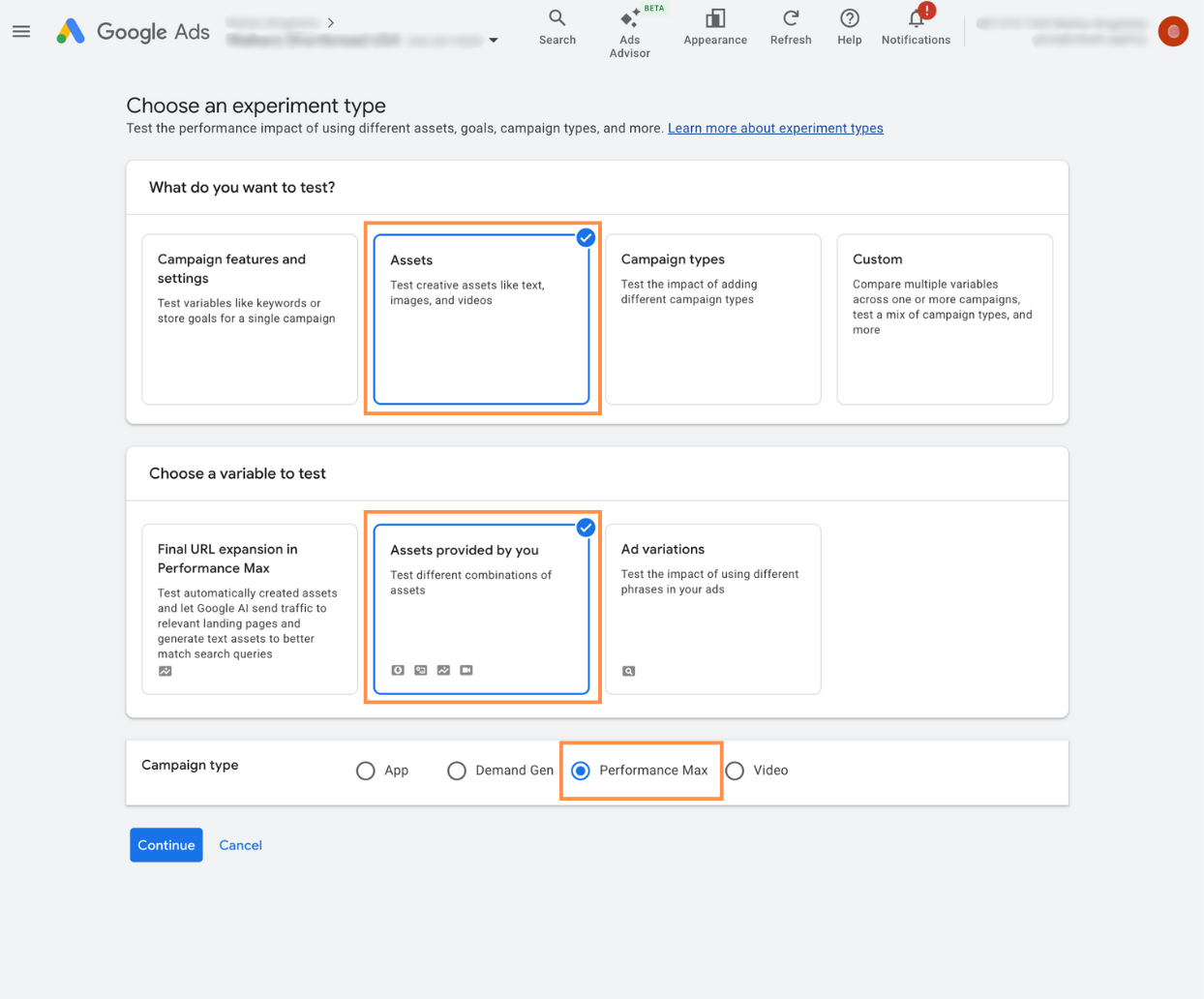

What Google is actually giving us

The mechanics are straightforward but strategically important:

You pick a Performance Max campaign and one asset group. Within that asset group you define:

- Assets A: the control – your currently live creative set

- Assets B: the treatment – new or alternative creatives

- Common assets: everything that continues to serve in both variants

You then set a traffic split between A and B, and Google runs a structured experiment over a recommended minimum of 4–6 weeks. The asset group is locked during the test, and all the usual limits and policy checks still apply.
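Before launching, it can help to write the plan down in a form you can sanity-check. The following is a minimal sketch of such a planning record. It is purely illustrative: `AssetExperimentPlan` and its fields are hypothetical names, not objects from the Google Ads interface or API, and the checks only encode the rules described above.

```python
from dataclasses import dataclass, field


@dataclass
class AssetExperimentPlan:
    """Hypothetical planning record; not a Google Ads API object."""
    campaign: str
    asset_group: str
    assets_a: list[str]            # control: the currently live creative set
    assets_b: list[str]            # treatment: new or alternative creatives
    common_assets: list[str] = field(default_factory=list)
    split_b_percent: int = 50      # share of traffic sent to Assets B
    planned_weeks: int = 6

    def problems(self) -> list[str]:
        """Return readable issues; an empty list means the plan looks sane."""
        issues = []
        if not self.assets_a or not self.assets_b:
            issues.append("both Assets A and Assets B need at least one asset")
        overlap = set(self.assets_a) & set(self.assets_b)
        if overlap:
            issues.append(f"move shared assets to common assets: {sorted(overlap)}")
        if not 0 < self.split_b_percent < 100:
            issues.append("traffic split must leave traffic for both variants")
        if self.planned_weeks < 4:
            issues.append("planned duration is below the recommended 4-6 weeks")
        return issues
```

Calling `problems()` on a draft plan before you touch the account is a cheap way to catch a variant that shares assets with the control or a test window that is too short.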

The key point is that this happens within a single asset group. That’s where the value lies. You’re no longer forced to choose between “clean test” and “clean campaign structure” – you can keep your existing PMax architecture and still run disciplined creative experiments.

Creative strategy in a PMax world: what changes?

The introduction of asset experiments doesn’t transform PMax into a classic multi-ad-group search campaign. The system is still heavily automated, and you still don’t get the level of control you have in manual search. But it does shift how we can approach creative strategy.

Three practical implications stand out.

1. You can finally test hypotheses, not just variations

Previously, creative iteration in PMax often devolved into “let’s add more assets and hope the system sorts it out.” With structured A/B tests, you can design hypotheses with intent.

For example, instead of randomly swapping headlines, you can test:

- Direct response vs. value narrative (e.g. “Get 30% Off Today” vs. “Built To Last 10+ Years”)

- Product-led vs. problem-led visuals

- Brand-heavy vs. performance-heavy messaging

Because A and B share the same underlying setup, differences in performance are more plausibly attributable to creative direction than to structural noise. That’s a meaningful upgrade for anyone trying to align PMax with broader brand and messaging strategy.

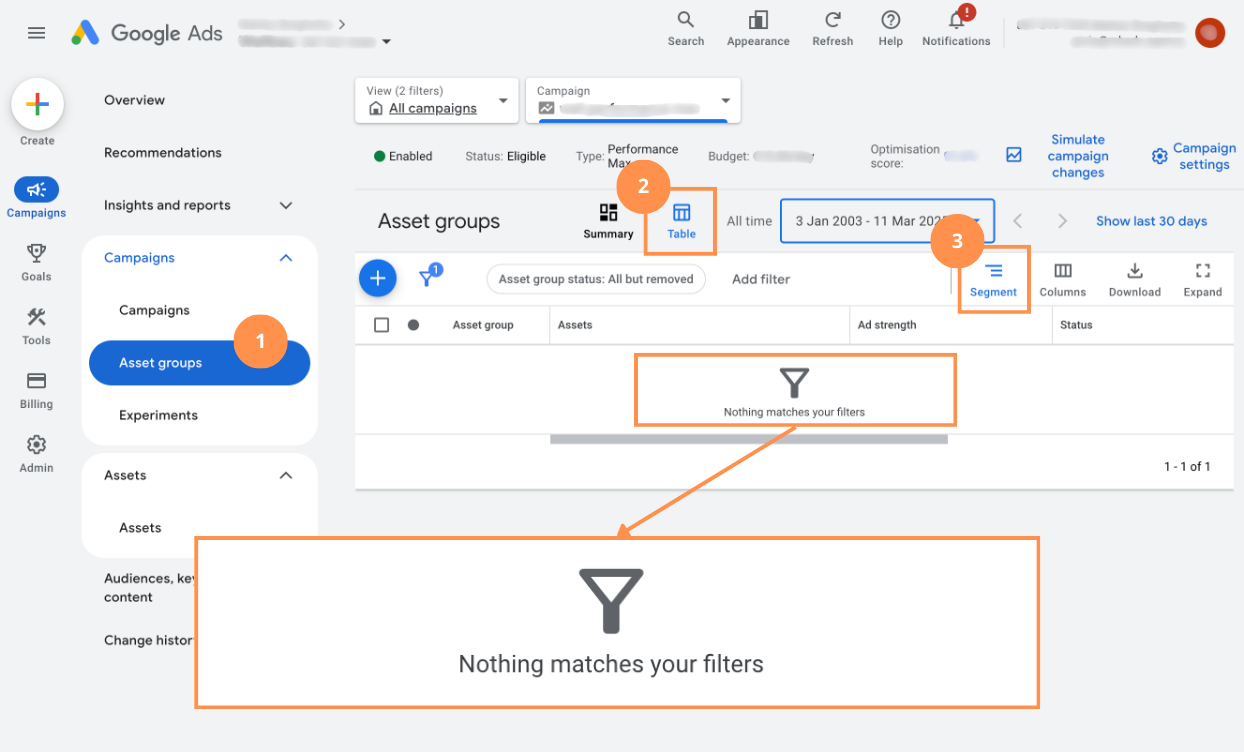

2. It forces discipline on asset groups

The “one asset group per experiment” limit will frustrate some people, but it’s actually a helpful constraint. It pushes you to treat asset groups as coherent entities rather than dumping grounds.

If an asset group is a mix of audiences, product categories, and intents, your creative test will be noisy by definition. The experiment beta makes that problem more visible. To get clean reads, you’ll want asset groups that:

- Represent a reasonably consistent product or service cluster

- Address a defined intent or customer segment

- Aren’t being constantly reconfigured mid-flight

In other words, the feature quietly rewards well-structured PMax setups and penalizes chaos.

3. It reframes “creative fatigue” inside PMax

One of the more opaque aspects of PMax has been understanding when creative fatigue is real versus when the system is simply reallocating impressions based on auction conditions.

With asset experiments, you gain a more grounded way to evaluate whether “fresh” creative actually moves the needle. If variant B consistently outperforms control A over several weeks with a stable traffic split, you have stronger evidence that performance shifts are creative-driven rather than just seasonal or competitive.
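If you export conversions and traffic per variant, you can run your own rough check alongside Google's guidance. The sketch below uses a standard two-proportion z-test. It is not Google's statistical framework, and treating clicks as the unit of analysis is an assumption made for illustration, since PMax serves across surfaces where other interaction types may matter.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two_sided_p) for the conversion-rate difference B minus A.

    conv_x: conversions for the variant; n_x: clicks (assumed unit) for it.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that A and B convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in outcomes; the test is undefined")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value
```

A positive `z` means B converted at a higher rate than A. Checking this every week and stopping at the first significant reading inflates false positives, so it is safer to decide the evaluation date up front and treat interim numbers as directional.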

Limitations worth respecting

It’s tempting to celebrate this as “PMax finally doing testing right.” It’s progress, but there are still constraints that matter for how you use it.

First, the asset group lock during the test is more than a minor operational detail. It means you need to time experiments around merchandising changes, seasonal pushes, and feed updates. Launching a test right before a major promotion or price change will contaminate your results, and you can’t tweak the asset group mid-flight to compensate.

Second, the recommended 4–6 week duration is a reminder that PMax is still a multi-surface, multi-signal system. Short, aggressive tests may not give the algorithm enough time to settle into representative patterns, especially for lower-volume accounts. This is closer to running brand lift than to quick headline tests in standard search.
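To get a feel for why lower-volume accounts need longer tests, you can estimate how many weeks it takes to collect enough traffic to detect a given lift. This is a rough sketch based on the textbook sample-size formula for comparing two proportions. The function name, the default 5% significance level and 80% power, and the use of clicks per variant are all assumptions, not values Google publishes for PMax experiments.

```python
from math import ceil
from statistics import NormalDist


def weeks_needed(baseline_cvr, relative_lift, weekly_clicks_per_arm,
                 alpha=0.05, power=0.8):
    """Estimate weeks required to detect a relative lift in conversion rate."""
    p1 = baseline_cvr
    p2 = baseline_cvr * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    # Clicks needed in each variant for the chosen alpha and power.
    n_per_arm = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return ceil(n_per_arm / weekly_clicks_per_arm)
```

For instance, `weeks_needed(0.03, 0.20, 2000)` estimates the duration for a 3% baseline conversion rate, a 20% relative lift, and 2,000 clicks per variant per week. Halving the weekly traffic or the expected lift pushes the estimate up quickly, which is the practical reason short tests rarely work in small accounts.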

Third, the fact that disapproved assets simply don’t serve may skew perceived performance if you’re not watching closely. If one variant ends up with fewer active creatives due to policy issues, you’re no longer comparing like with like – you’re comparing a richer asset set to a constrained one.
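One way to watch for this is to compare how many assets are actually eligible to serve in each variant. The snippet below is a small sketch that assumes you have each variant's assets in a dictionary of asset name to review status. The `"APPROVED"` status string, the function name, and the 25% tolerance are hypothetical choices for illustration.

```python
def active_asset_imbalance(variant_a, variant_b, tolerance=0.25):
    """Return True if the variants differ too much in serving assets.

    variant_a / variant_b: dict mapping asset name -> review status string.
    """
    def active(assets):
        # Count only assets that are approved and therefore able to serve.
        return sum(1 for status in assets.values() if status == "APPROVED")

    a, b = active(variant_a), active(variant_b)
    larger = max(a, b)
    if larger == 0:
        return True  # nothing is serving in either variant
    return (larger - min(a, b)) / larger > tolerance
```

If this flags an imbalance early in the test, it is a signal to read the results with extra caution, because the asset group lock means you cannot top up the weaker variant mid-flight.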

Finally, this is still Google’s statistical framework, not a sandbox for your own methodology. You get guidance, not raw log-level data. For most advertisers that’s acceptable; for analytically demanding teams, it will feel like progress but not transparency.

Where this leaves PMax in the broader Google Ads ecosystem

If you zoom out, asset A/B experiments are another small but telling step in the reconciliation between automation and control.

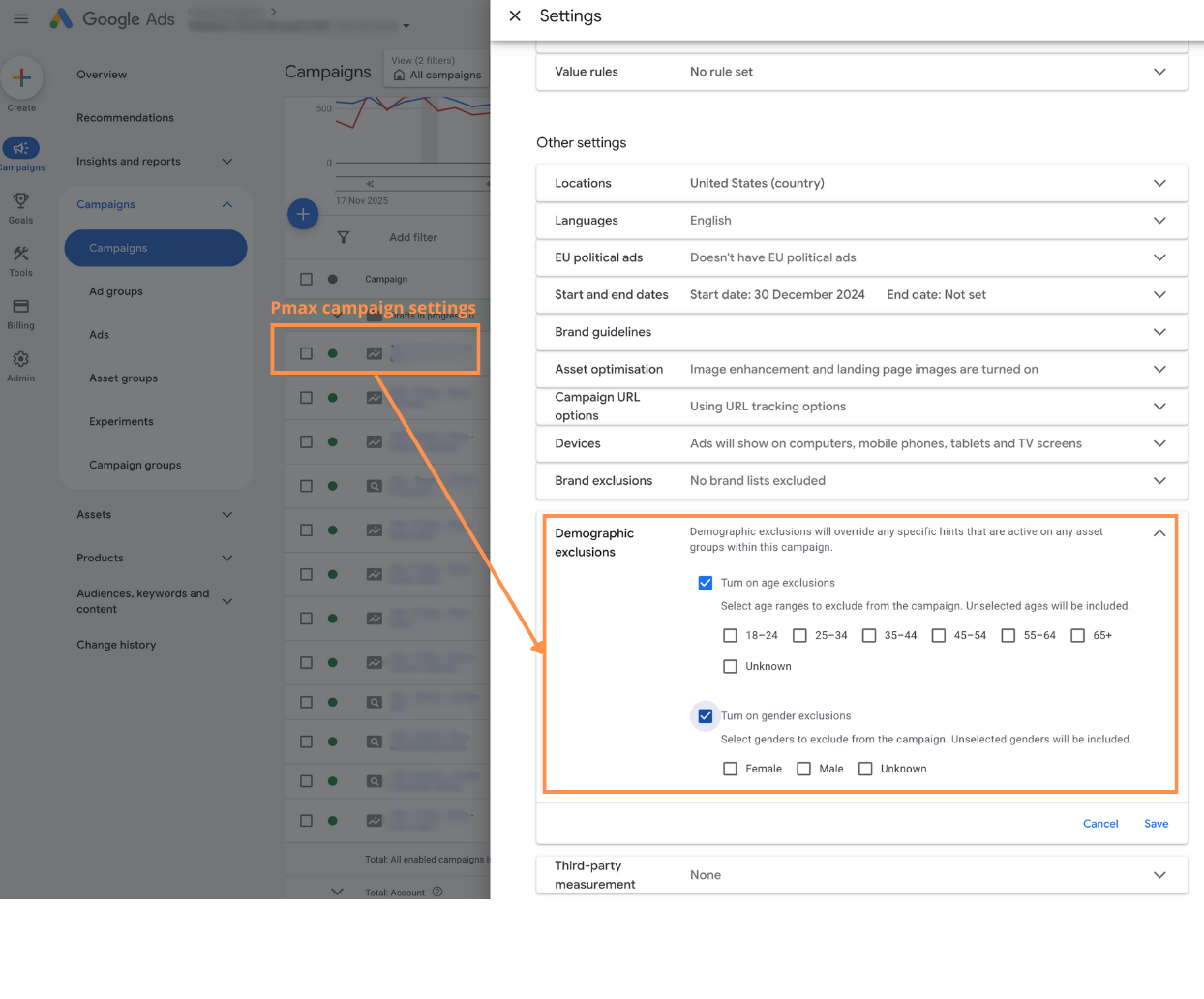

Search is moving toward broad match, Smart Bidding, and RSAs, but we still retain levers for query control and ad testing. PMax has been at the extreme end of automation, asking advertisers to trust the system with almost everything. Features like search term insights, brand exclusions, and now asset experiments suggest Google recognizes that fully opaque automation doesn’t align with how serious advertisers operate.

For practitioners, the opportunity is less about playing with a new toggle and more about rethinking how creative strategy plugs into PMax:

- Use experiments to validate creative territories, not just micro-variants

- Align PMax tests with messaging decisions across search, social, and landing pages

- Treat experiment results as directional inputs into broader brand and performance strategy

PMax is still not a precision instrument. But with structured creative experiments, it becomes a more legitimate environment for learning, not just scale.

The interesting work now is to see how far we can push that learning before the next layer of automation arrives.

